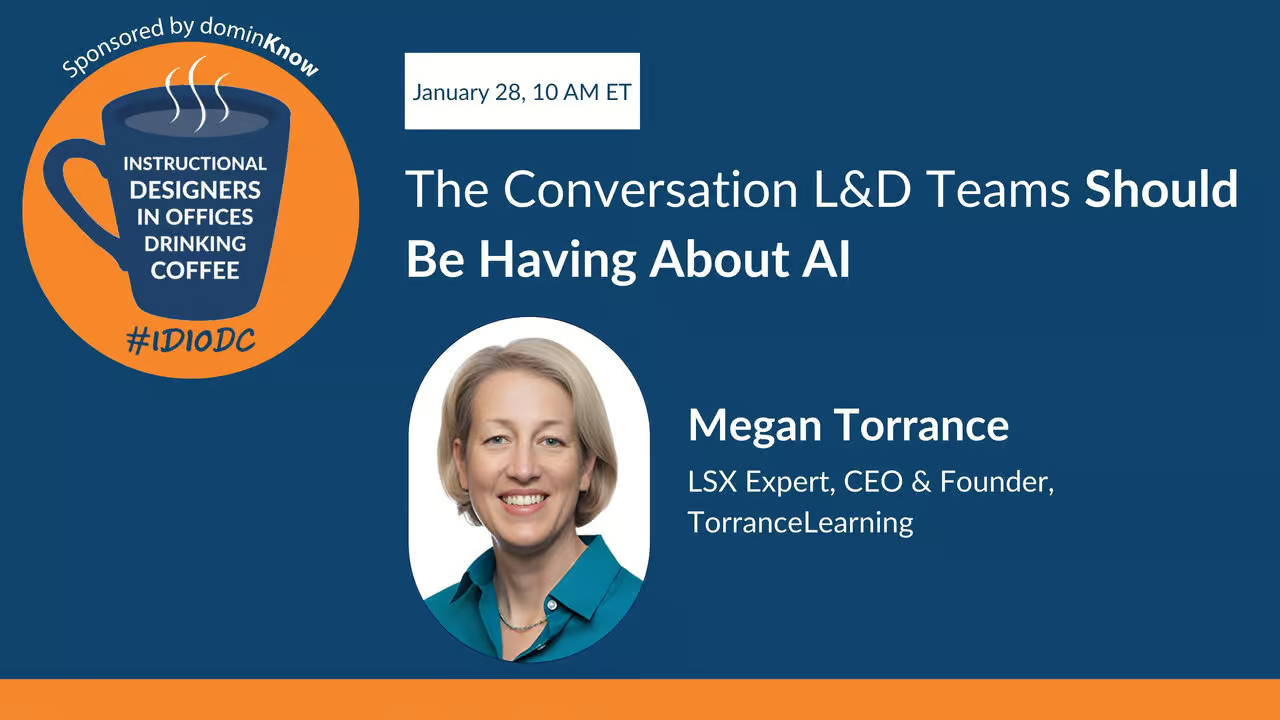

IDIODC #262: The Conversation L&D Teams Should Be Having About AI

Hey, everybody. Welcome to Wednesday. Megan Torrance is here with us today. Megan, there may be folks joining us today who haven't encountered you. Introduce yourself to them.

I lead TorranceLearning, a group of learning design, development, and engineering professionals. We help clients solve performance challenges through learning and support. We also help fellow L&D professionals improve their skills in agile project management, data analytics, xAPI, and AI.

The Genesis of the AI Implementation Guide

This comes from helping L&D professionals level up. I wrote a book on data analytics that went to press two weeks after ChatGPT was released. By the time it hit shelves, nobody cared about data analytics. Everyone was doing AI party tricks. I realized this was an opportunity for learning professionals to step in and help organizations navigate this hard place. The spark for the book was the question of how we implement AI, keep humanity involved, and end up with something durable and reasonable.

L&D's Unique Position: Power Without Authority

Learning and development does not have authoritative power in organizations. The CLO rarely gets promoted to CEO. But we have other sources of power. L&D people have broader, more vertically oriented networks than others because we talk to subject matter experts, learners, and leadership. We have access to all these people. Our job is to figure out new topics well enough to teach them. We think through processes. We come with classroom management and facilitation skills.

The AI Implementation Canvas: 14 Conversations

All of that bakes into what I call the AI Implementation Canvas. It's 14 different conversations to have in the organization about AI. Some conversations are obvious for L&D to lead, but we can and should participate in many others, sometimes just facilitating.

Three Zones of AI Impact

L&D is juggling a lot, and we sort it into what we call zones of impact. Zone one is your own productivity tools - Claude, ChatGPT, Gemini, Copilot, Midjourney. The output is still the same: xAPI course, MP4, image, infographic. Zone two is when what I put in front of the learner is a direct AI interface - chatbot, performance coach, role play, AI-driven curriculum. There's a different duty of care. Zone three is when something in the business changes and L&D must react because people need different skills or we need different people, or the process changed and we teach people the new process. There's actually a fourth zone - helping our leaders.

Whenever you're making something somebody else consumes, you have zones one, two, and three. HR making something for employees has all three zones. Finance releasing smart dashboards has three zones.

Strategic Foundations: The First Four Conversations

The 14 seems like a lot, but I group them into four sets. Strategic foundations includes business alignment and value proposition. What are we expecting? Is it aligned with our business process, strategy, competitive marketplace? There's the regulatory and legal environment - AI and data protection. Even without AI-specific regulation, you may be subject to data protection rules such as GDPR. AI can also affect how existing regulation applies. There's workforce impact and talent planning. Then AI readiness - do we have processes defined? Good data? Security around data so we're not crossing streams? It's one thing to point AI tools at your entire SharePoint directory, but what else is in there?

AI Readiness: Disaster Preparedness and Kill Switches

Disaster preparedness. Are we prepared to handle errors and mistakes? We're releasing an AI practice module. We've set up an audit trail that captures all the data - everything a person says and everything the AI says back. We have processes for vetting the data stream and watching what people do. We built a kill switch to quickly turn off the AI. We have a backup - pre-scripted responses, like any other e-learning. These are different kinds of readiness. It means a hypercare environment after we release a course, one that we otherwise wouldn't have.
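
The readiness measures described above (an audit trail, a kill switch, and a pre-scripted fallback) could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the implementation discussed in the episode: the class name `PracticeModule`, the `ai_reply` callable standing in for a real model call, and the sample scripted replies are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List


@dataclass
class PracticeModule:
    """Hypothetical wrapper around an AI practice activity."""

    ai_reply: Callable[[str], str]    # stand-in for the real model call (assumption)
    scripted_replies: Dict[str, str]  # pre-scripted fallback responses (assumption)
    default_reply: str = "Let's continue with the scripted scenario."
    ai_enabled: bool = True           # the "kill switch" state
    audit_log: List[dict] = field(default_factory=list)

    def _record(self, speaker: str, text: str, source: str) -> None:
        # Audit trail: keep everything the learner says and everything sent back.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "speaker": speaker,
            "text": text,
            "source": source,
        })

    def _scripted(self, learner_text: str) -> str:
        # Look up a pre-scripted reply; fall back to a generic one.
        key = learner_text.strip().lower()
        return self.scripted_replies.get(key, self.default_reply)

    def kill(self) -> None:
        # Kill switch: stop calling the AI and serve scripted replies only.
        self.ai_enabled = False

    def respond(self, learner_text: str) -> str:
        self._record("learner", learner_text, "input")
        reply, source = self._scripted(learner_text), "scripted"
        if self.ai_enabled:
            try:
                reply, source = self.ai_reply(learner_text), "ai"
            except Exception:
                # If the AI call fails, the scripted reply is used instead.
                pass
        self._record("system", reply, source)
        return reply


if __name__ == "__main__":
    module = PracticeModule(
        ai_reply=lambda text: f"AI coach: tell me more about '{text}'.",
        scripted_replies={"hello": "Hi, welcome to the practice scenario."},
    )
    print(module.respond("Hello"))
    module.kill()
    print(module.respond("Hello"))
    print(len(module.audit_log), "audit entries recorded")
```

Keeping the switch and the fallback in the same place as the logging means every turn is recorded with its source, so a reviewer can tell which replies came from the AI and which came from the script.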

Most organizations think AI will save time and money and reduce staff. But you've opened a can of worms about things that still need care and accommodation. It's critical that someone takes that responsible perspective.

Technology Infrastructure and User Experience

On the canvas, technology includes the experience. User experience in the workflow. I heard stories where somebody brought in an AI chatbot to support call center folks. They had to ask it nicely and structure the ask rather than quick keyword searches. It actually slowed them down and didn't get better answers. A technology-driven solution that doesn't consider people is a problem. Then there's governance. I hear banking people say they don't get cool tools. I'm like, you have my money. I'm glad Grok isn't giving advice about my money. The implementation canvas doesn't have answers. It's a book full of questions. What conversations should you have? Answers change as technology changes, organization to organization, industry to industry.

Data Governance and Responsibility

If we're collecting and watching, and we see something alarming, what is our responsibility? When doing backend testing, I put in alarming content, realizing my team would see what I came up with. There's an additional duty of care. This is zone two, where we're putting AI tools in front of people.

Design and Implementation Enablers

This touches the third part of the canvas - design and implementation enablers. Organizations are getting good at experimentation and pilots. But once you get the green light, what does scaling look like? We built an experience, but to scale it we had to change the LLM it used, which meant our prompting changed. They speak different dialects. We needed to think differently going from pilot to scale. You can't just send the link to everybody. That's how you run into problems. Then there's measurement and impact. What do we want? How do we measure it? You can measure how often people use a tool, hours spent, license usage. Sam Rogers measures competence and capability - are you managing risk? Engaging? Critically reviewing results? Making adjustments?

You and Chris and I have been having this conversation since the xAPI days. All of a sudden you're collecting more information. Is xAPI too Big Brother-ish? We had no idea what was coming. With great data comes great responsibility. Ten years ago people said if we get IT or legal involved, this will never get done. Please don't call IT. Now, because of the data, scale, and impacts, if you don't talk to your IT and legal people, it will never get done. They should be your best friends. Make them heroes.

Human-Centered Adoption and Change

IT, legal, and then the business. If you don't understand your business processes, you risk spinning brain cycles on things that won't move the needle or solve real problems. The fourth quadrant is human-centered adoption and change. How do we have bias mitigation and human protection conversations? Stakes are higher. How do we look at change management and adoption? L&D is often the last mile of change management. We have AI literacy and upskilling. I've often seen AI literacy treated as risk management, a compliance effort. Compliance friends know how to make sure everybody does it. They get good blanket coverage. It's not enough though. This gives us an opportunity to not just crank out e-learning. How do I apply this and get support on a regular basis? How do I build a community around learning this? Then social engagement. How do we keep connections between people? We're in a great position to ask that question.

Bias Mitigation and Inclusive AI

Often, the people who can least see bias are the ones who benefit most from it. You don't see the systems in which you operate successfully as systems or constructs. It just seems natural. That's how our brains work. We don't think excessively about things that seem to be working. It helps when I'm able to switch the conversation - it's garbage in, garbage out. Do you want to make decisions based on bad information? Nobody wants that. The bias conversation is one where what's important is the conversation. Research shows different models spread out differently across ideological perspectives. Knowing that is helpful to having conversations. Looking around a room as you're making decisions - who's not here? The people who use the system. Maybe we should include them. They are affected stakeholders and ought to be engaged.

In our ethical and responsible use framework, one element is inclusive data. Not exclusively the DEI kind of inclusive. Just good data coming in. Five years ago, how did I solve for coming from a white, Gen X, middle-class, female, Ivy League-educated MBA perspective? I talked to other people with different perspectives. I asked them for feedback on my work. Same thing when I have AI-generated output - get perspectives from other people. I can also ask my AI tools to take the perspective of someone who has a different perspective. That's an interesting proxy, but probably not sufficient.

AI's Opportunity: Personalization and Reach

There's the opportunity to personalize learning and support people you maybe weren't able to reach before. Maybe I couldn't offer a beginner's version or a non-English version. Maybe I needed a language spoken by far fewer people, or a reading level others didn't have. I now have the ability to do that and reach those audiences in ways that weren't possible. It's not the answer because the answer keeps changing. It's the conversation we're having and the possibilities it opens up. Ian Newland told me at DevLearn they used to build training for certain groups. He had a group of four people in an Eastern European country who'd never had their own stuff. Now they could. That's pretty cool.

Surprises and AI Literacy Gaps

I remember being in a meeting with senior leaders from a client organization about a particular AI use. Everybody was super excited. They were smart people excited about what I was doing, so I was flattered. They started asking questions about AI capabilities that made me realize I needed to support them with AI literacy and the state of the technology in a very gentle way. I couldn't say you're idiots or you've been under a rock. That was a good reminder that people who show up and have conversations like we're having tend to be more AI literate than the decision makers or stakeholders in their organization. We'll have to make sure we're paying attention and bringing everybody along. Otherwise, we're not gonna get the good decisions we need made.

Upcoming Webinar and Book Launch

On March 3rd at 11:00 AM Eastern, Megan's joining us for "An L&D Professional's Guide to AI Strategies that Deliver Results." We are all tasked with results in this industry. At the top they're saying we must have AI. That doesn't mean they're experts. They just decided that's the direction. What do they want? Results. You've talked about so many things that tie into results. Everything impacts results, making them better or worse.

The book is coming out in June. If you're at the ATD conference in Los Angeles in May, you'll get it early. There'll probably be a couple of sessions at the ATD conference.

IDIODC (Instructional Designers in Offices Drinking Coffee) is brought to you by dominKnow. We empower L&D teams to develop, manage, and deliver impactful learning content at scale. Megan, thank you so much. This is, as always, an awesome conversation. It's particularly timely. Having the conversation is the key thing right now.
