eLearning ROI: How to Evaluate the Success of an eLearning Project

These days, every L&D department understands the need to prove the value of their work to their executives.

The need to justify training costs is especially important for bespoke eLearning solutions. With the rise of off-the-shelf eLearning, the big bosses want to know that the results justify an investment that may not strictly be necessary if the generic courses do the job just as effectively – and for much less money.

One popular evaluation method is to calculate the return on investment for eLearning – but how do you make a robust calculation of eLearning ROI? What should you include, and what should you leave out? And most importantly…

eLearning ROI can be your secret weapon for proving that the benefits of training development outweigh the costs. It's a hard metric that executives from all industries value because it goes straight to the bottom line. It translates many complex factors into one easy-to-understand number: the program's profitability.

However, no one deploys their secret weapon at every opportunity.

Patti Phillips of The ROI Institute says that eLearning ROI is only necessary for 5-10% of training programs. The process requires an investment of time and money that simply can't be justified for most run-of-the-mill eLearning projects.

Instead, save ROI calculations for training programs that require significant investment, especially programs that are getting a lot of attention from upper management.

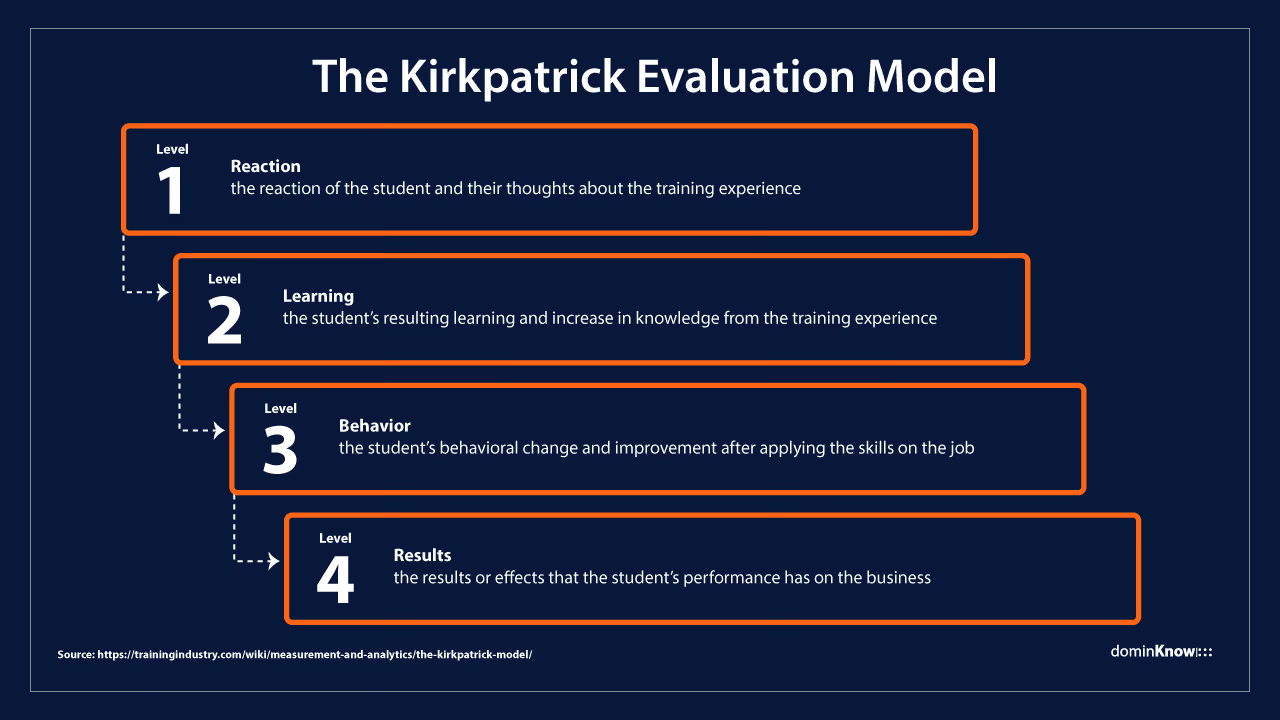

Luckily, eLearning ROI isn't the only method for evaluation. You'll sometimes hear about the Kirkpatrick Model's five levels, but truthfully, these levels come from two different models. Before we talk about eLearning ROI, let's look at the four other evaluation levels and when they're appropriate.

The Kirkpatrick evaluation model, in its original form, didn't directly address eLearning ROI. Rather, it provided a framework for evaluating the effectiveness of a training program at four levels.

Level 1 of the Kirkpatrick Model focuses on learner reactions to your training. The measuring tool is a post-training survey or "smile sheet."

The idea behind this reaction level is to make sure you have enough learner buy-in – something that's critical when you're teaching adults. If the UI is distractingly difficult, or the level of the material is perceived as too easy or too hard, or the subject matter is not directly useful, your learners may choose not to finish the course.

A reaction-level evaluation won't impress the C-suite, but that doesn't mean it isn't worth doing. It's a good way to gather feedback from your learning audience that you can use to inform your instructional design strategy.

Level 2 of the Kirkpatrick Model focuses on how your eLearning program increases learners' knowledge and/or skills. The measuring tools are quizzes, tests, and other evaluations, including pre-course, in-course, and post-course assessments.

These metrics are important for determining whether an eLearning project meets its learning objectives, as well as finding knowledge gaps that aren't currently being closed.

Pre-tests, while not essential, can provide an important piece of the puzzle. After all, a high test score says little about the value of an eLearning course if your learners knew the answers before they began. With the right authoring tool, you can let those learners test out of the course, and with the help of xAPI you can even track their practice questions.

Even if your learners understand the concepts and retain the knowledge from a course, you may be falling short of your goals if they don't apply what they learned. That's why behavior is the focus of Level 3 in the Kirkpatrick model.

This level is the first real step that will matter to your executives. The Kirkpatricks themselves have called Level 3 the "missing link" between learning and results – underappreciated and often ignored.

Still, while experts recommend regular evaluation of eLearning at Levels 1 and 2, they say Level 3 should only be applied periodically. That's because behavior evaluations are more logistically challenging than a survey or quiz. Since the measurement involves those outside the L&D team, you need the cooperation and buy-in of learners, direct managers, and other stakeholders. Not only do they have the power to monitor for changed behavior, but they also have the influence to discourage or encourage the change.

Additionally, it's necessary to plan Level 3 evaluations before eLearning development begins, for two reasons: so that the training itself is engineered to drive the necessary change, and so that you can prepare a sensible evaluation method. You also need to establish behavioral baselines before training, and post-training behavior evaluations have an extended turnaround. They should take place at least a month after training is complete, and more likely two or three months after.

Due to the extra coordination and effort, you should reserve behavior-level evaluations for training initiatives that address behavior critical to the performance of a team or department. In other words, apply it to behaviors that will directly impact a key performance indicator (KPI).

When the behavioral change needs to be broad, across multiple departments, targeting at least some modules to specific audiences will work better than a single universal course. That way, you can address the specific changes required for each role.

Measuring tools for behavioral change can be quantitative or qualitative, including on-the-job observations, productivity or efficiency metrics, and interviews with direct supervisors and managers.

If you're going to invest in Level 3 evaluation, it also makes sense to set up measures that provide accountability and support for the change. These may include monitoring behavior and tracking individual performance, providing job aids and coaching, or making the behavior part of learners' performance reviews.

In the Kirkpatrick model, Level 4, the impact of training on key performance metrics, is the pinnacle of the pyramid. It asks whether your eLearning project produced a significant change in one or more KPIs.

While the first three levels focus on how training affects the individual, Level 4 assessments are where we expand our view to eLearning's impact on the business as a whole. How does the learners' changed behavior impact sales numbers, new accounts, customer satisfaction scores, compliance infractions, or turnaround times?

There may be multiple KPIs that change as the result of a single training program. Consider and account for all the possibilities – learners and their direct supervisors may be able to identify effects that you miss.

Sometimes ROI calculations get lumped into Level 4 of the Kirkpatrick model, but traditionally this step is for non-monetary measurements. eLearning ROI calculations require additional steps and information to turn KPI deltas into a monetary return, so it makes sense to envision them as discrete steps.

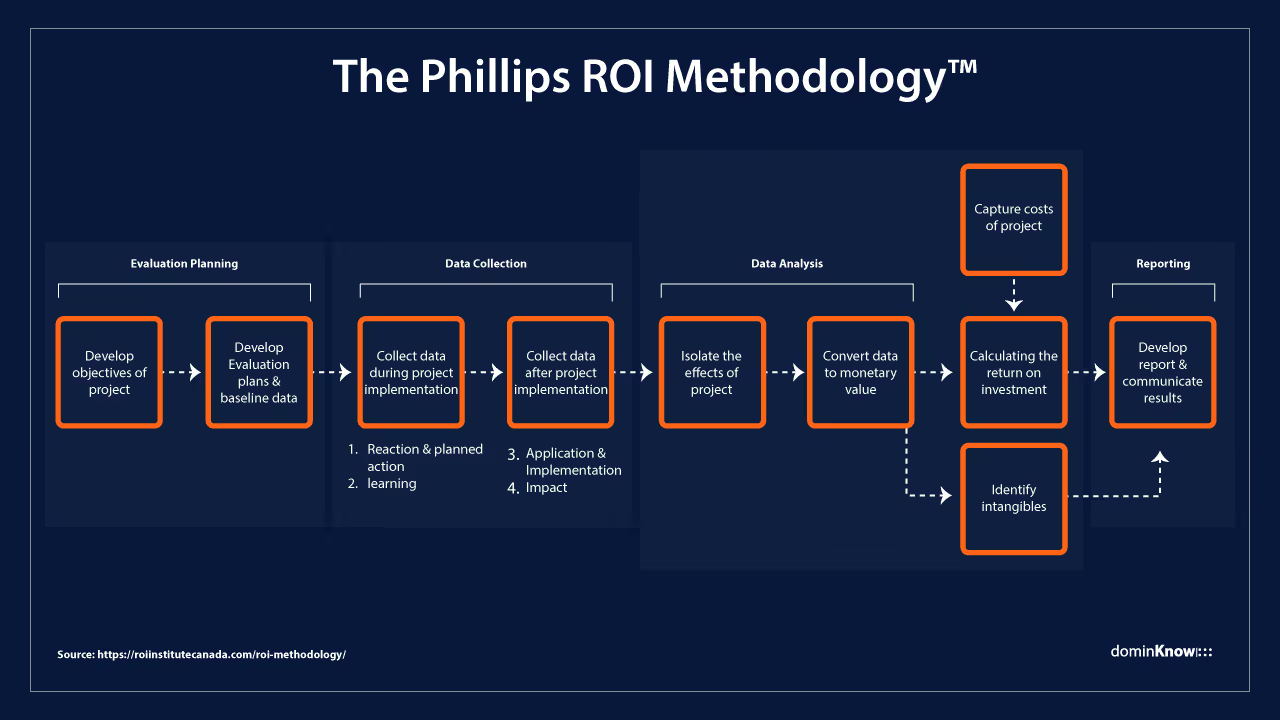

The Phillips evaluation model builds on the Kirkpatrick model in a few ways. It also fleshes out certain concepts to provide a methodology for planning your evaluation, gathering data, analyzing results, and reporting to multiple audiences.

We recommend reading the latest edition of The Bottomline on ROI by Patricia Pulliam Phillips for the whole picture.

For the purposes of this article, we're just going to focus on a few key differences between the Phillips and Kirkpatrick models: the two additional levels that support the calculation of eLearning ROI, the need to isolate training effects from other potential sources of change, and the framework for intangible benefits.

To state the obvious: to calculate a return on investment, you need to calculate both the investment and the return. Level 0 was added to account for training costs.

Include direct and indirect costs of the training program, from planning and development to rollout and evaluation. Remember to include things like eLearning development costs, employee time to complete the training, software tools, new equipment, awareness campaigns, the cost of determining ROI itself, and the like. Prorate general L&D costs for that specific program, as appropriate.
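If you track costs in a spreadsheet or a script, the Level 0 roll-up is simple arithmetic. Here's a minimal Python sketch, assuming a hypothetical `CostItem` ledger in which each line has a dollar amount and a `share` (1.0 for costs incurred entirely by this program, a fraction for prorated general L&D costs). All figures are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    """One line in a program's Level 0 cost ledger (hypothetical structure)."""
    name: str
    amount: float       # full cost in dollars
    share: float = 1.0  # fraction charged to this program (1.0 = direct cost)


def total_program_cost(items: list[CostItem]) -> float:
    """Sum direct costs plus the prorated share of general L&D costs."""
    return sum(item.amount * item.share for item in items)


# Hypothetical figures for illustration only.
ledger = [
    CostItem("eLearning development", 40_000),
    CostItem("Learner time: 500 learners x 1.5 h x $40/h", 500 * 1.5 * 40),
    CostItem("Authoring tool license", 6_000),
    CostItem("Awareness campaign", 2_500),
    CostItem("Cost of the ROI evaluation itself", 5_000),
    CostItem("General L&D overhead", 120_000, share=0.10),  # 10% prorated
]

print(f"Total program cost: ${total_program_cost(ledger):,.2f}")
```

Keeping the `share` on each line makes your proration assumptions visible, which helps when executives question how overhead was allocated.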

The Phillips Model also added Level 5 for calculating ROI.

First, convert all KPI impacts from Level 4 into dollar amounts. For example, an increase in sales translates into additional sales profit, and a reduction in employee turnover translates into savings on recruitment and onboarding.
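In practice, this conversion means pairing each KPI change with a dollar value per unit. The sketch below assumes hypothetical KPI deltas and unit values (a profit figure per extra deal, a replacement cost per avoided exit); in a real evaluation, those unit values would need to be agreed with finance or HR.

```python
# Hypothetical Level 4 results for one year. Each KPI delta is paired with
# an assumed dollar value per unit of change.
kpi_impacts = {
    # KPI: (change in units per year, dollar value per unit)
    "additional deals closed": (120, 850.0),   # assumed profit per deal
    "voluntary exits avoided": (4, 15_000.0),  # assumed recruiting + onboarding cost
}


def monetize(impacts: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Convert each KPI delta into an annual dollar benefit."""
    return {kpi: delta * unit_value for kpi, (delta, unit_value) in impacts.items()}


benefits = monetize(kpi_impacts)
total_benefits = sum(benefits.values())
for kpi, value in benefits.items():
    print(f"{kpi}: ${value:,.0f}")
print(f"Total annual benefits: ${total_benefits:,.0f}")
```

Note that the sales line uses profit per deal, not revenue per deal; counting gross revenue would overstate the return.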

Add these up to find the total monetary benefits (the "return") associated with your training program, then calculate ROI:

ROI (%) = ((Total Benefits – Total Costs) / Total Costs) × 100

Typically, ROI is calculated per year. A positive number means that the return was greater than the investment. A negative number means that the investment was greater than the return. Zero would mean it's a wash.
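The ROI formula above, together with how to read its sign, fits in a few lines. This is a minimal Python sketch; the annual benefit and cost figures passed in at the bottom are hypothetical.

```python
def roi_percent(total_benefits: float, total_costs: float) -> float:
    """Annual ROI (%) = (benefits - costs) / costs * 100."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_benefits - total_costs) / total_costs * 100


def interpret(roi: float) -> str:
    """Read the sign of an ROI figure."""
    if roi > 0:
        return "return exceeded investment"
    if roi < 0:
        return "investment exceeded return"
    return "break-even"


# Hypothetical annual figures.
roi = roi_percent(total_benefits=162_000, total_costs=95_500)
print(f"ROI: {roi:.1f}% ({interpret(roi)})")
```

With these numbers, the program returns roughly 70 cents of net benefit for every dollar invested.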

Levels 3 and 4 of the Kirkpatrick Model look at changes in behavior and KPIs, but they assume that all of that change is attributable to training.

The problem? Businesses rarely try just one solution to a problem at a time. In many cases, other company or department initiatives target the same metrics. Plus, there may be unrelated intervening factors, like hiring one incredibly productive new sales rep or having a critical piece of machinery break down.

The Phillips Model suggests methodologies to isolate training effects from other factors. One is to use control groups: pilot your training with a subset of workers, then compare their changes to those of the untrained group.
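Comparing the change in each group, rather than the raw post-training numbers, is often called a difference-in-differences comparison: whatever improvement the control group also saw is treated as coming from something other than training. Here's a minimal sketch, assuming both groups are broadly similar to begin with and are measured over the same period; the weekly sales figures per rep are hypothetical.

```python
from statistics import mean


def training_effect(trained_before, trained_after, control_before, control_after):
    """Difference-in-differences: the change in the trained (pilot) group
    minus the change in the untrained (control) group over the same period."""
    trained_change = mean(trained_after) - mean(trained_before)
    control_change = mean(control_after) - mean(control_before)
    return trained_change - control_change


# Hypothetical weekly sales per rep, before and after the pilot.
effect = training_effect(
    trained_before=[10, 12, 11, 13], trained_after=[15, 16, 14, 17],
    control_before=[11, 12, 10, 13], control_after=[12, 14, 12, 14],
)
print(f"Estimated training effect: {effect:+.1f} sales per rep per week")
```

Here the pilot group improved by 4 sales per week and the control group by 1.5, so only the difference of 2.5 is credited to training.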

Alternatively, you can ask the learners – and/or their supervisors – to estimate the impact of the program on output. You can use these estimates directly, but you may gain a more accurate picture by using those conversations to identify other potential sources of change. When possible, you can quantify those impacts and recalculate an isolated training ROI.
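One widely used way to turn these estimates into a number, which we're assuming here, is to discount each observed improvement twice: by the share the estimator attributes to training, and by how confident they are in that share. This keeps optimistic guesses from inflating the result. The `Estimate` structure and all responses below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """One learner's or supervisor's estimate about an observed improvement."""
    improvement: float     # observed KPI improvement, in dollars per year
    share_training: float  # estimated fraction caused by training (0-1)
    confidence: float      # estimator's confidence in that fraction (0-1)


def isolated_benefit(estimates: list[Estimate]) -> float:
    """Sum the improvements after discounting by attribution and confidence."""
    return sum(e.improvement * e.share_training * e.confidence for e in estimates)


# Hypothetical survey responses.
responses = [
    Estimate(improvement=20_000, share_training=0.50, confidence=0.80),
    Estimate(improvement=12_000, share_training=0.25, confidence=0.90),
    Estimate(improvement=8_000, share_training=0.60, confidence=0.50),
]
print(f"Benefit attributed to training: ${isolated_benefit(responses):,.0f}")
```

Of $40,000 in observed improvement, only about $13,100 survives the discounting, which is the figure you would carry into the ROI calculation.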

There are cases where an effect of training just shouldn't be converted into a dollar amount. To avoid damaging the credibility of your ROI calculation, the Phillips Model separates these out into a complementary category to bolster your evaluation report.

Benefits should be reported as intangibles if:

By including these benefits in a separate section of your report, you maintain the integrity of eLearning ROI while thoroughly conveying all effects.

The Role of xAPI Ecosystems in eLearning Evaluation

The xAPI spec and its ecosystem have opened possibilities for eLearning evaluation that were unthinkable before it was introduced.

For example, instead of relying solely on a smile sheet for Level 1, you can embed xAPI statements into all kinds of eLearning to capture learner "reactions" in real time. Plus, because xAPI records what learners actually do rather than what they say afterward, the data can be more candid and far more granular than a survey. You can answer questions like:

Even better, you can capture this data from all learning opportunities, whether or not they're delivered through an LMS. You can pull xAPI data from many informal eLearning tools, from knowledge bases to collaboration platforms to Vimeo. xAPI even makes it easier to capture offline learning.
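To make that concrete, here's what a single in-course reaction might look like as an xAPI statement: an actor, a verb, an object, and an optional result. This is a minimal Python sketch that only builds the JSON. The learner, the activity IRI, and the choice of the ADL `answered` verb with a 1-to-5 score are hypothetical choices for illustration. Actually sending the statement would mean POSTing it to your LRS's statements endpoint with the credentials and `X-Experience-API-Version` header the LRS requires.

```python
import json
import uuid
from datetime import datetime, timezone


def reaction_statement(learner_email: str, learner_name: str,
                       activity_id: str, activity_name: str,
                       rating: int, comment: str) -> dict:
    """Build an xAPI statement recording a learner's in-course reaction."""
    return {
        "id": str(uuid.uuid4()),
        "actor": {
            "objectType": "Agent",
            "name": learner_name,
            "mbox": f"mailto:{learner_email}",
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/answered",
            "display": {"en-US": "answered"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        "result": {
            "response": comment,
            "score": {"raw": rating, "min": 1, "max": 5},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical learner and activity IRI.
stmt = reaction_statement(
    "pat@example.com", "Pat Learner",
    "https://example.com/courses/safety-101/module-3/rating",
    "Module 3 rating", rating=4, comment="Clear, but the quiz felt rushed.",
)
print(json.dumps(stmt, indent=2))
```

Because each statement carries its own timestamp and activity ID, reactions can be analyzed per module rather than only once at the end of a course.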

Additionally, xAPI can bridge learning data and performance data to make Levels 3 and 4 evaluations less onerous. By embedding xAPI statements in tools like Salesforce, you can directly analyze the relationship between training and job performance.
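Once learning records and performance records share a common learner identifier, a first-pass analysis can be very simple. The sketch below assumes two hypothetical exports keyed by email address: the set of reps with a course completion (as might be derived from `completed` statements in an LRS) and quarterly closed deals per rep (as might be exported from a CRM). It compares the average for completers and non-completers.

```python
from statistics import mean

# Hypothetical exports, both keyed by the learner's email address.
completed = {"ana@example.com", "ben@example.com", "cy@example.com"}
deals_per_rep = {
    "ana@example.com": 14, "ben@example.com": 11, "cy@example.com": 13,
    "dee@example.com": 9, "eli@example.com": 12,
}


def compare_groups(completed: set[str],
                   performance: dict[str, float]) -> tuple[float, float]:
    """Mean performance of reps who completed training vs. those who did not.
    Raises statistics.StatisticsError if either group is empty."""
    done = [v for rep, v in performance.items() if rep in completed]
    not_done = [v for rep, v in performance.items() if rep not in completed]
    return mean(done), mean(not_done)


trained_avg, untrained_avg = compare_groups(completed, deals_per_rep)
print(f"Completed: {trained_avg:.1f} deals/quarter; "
      f"not completed: {untrained_avg:.1f}")
```

A gap like this shows correlation, not causation; stronger performers may simply be more likely to finish training. Pair it with the isolation techniques from the Phillips Model before attributing the difference to the course.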

The same goes for other business systems, such as sales enablement, support ticketing, point-of-sale, and inventory systems, though each requires some IT setup. Even offline work can be tracked with tools like observational checklists that trainers and supervisors use to validate hands-on skills.

The right authoring tool can not only support xAPI but directly improve eLearning ROI by facilitating more efficient eLearning development.

Our customers tell us all the time about the ways that dominKnow | ONE saves them time and money, including:

Ready to boost your team's efficiency? Contact us today for a free 14-day trial or to speak with one of our experts about how we can help you!

